Fine-tuning large language models: A primer

Companies are eager to leverage the benefits generative AI (GenAI) offers. Implementing the technology, however, isn't a 'plug-and-play' process. Instead, it hinges on a critical task — fine-tuning a pretrained large language model (LLM) to be a specialist at your intended domain or application.

Successful GenAI implementation in any organization requires a phased approach that begins with establishing a strong foundation of high-quality fine-tuning data. This involves curating extensive datasets that are highly relevant to the intended application. From our experience working with frontier AI model builders, we've identified four key characteristics that are essential for optimal training data.

- Relevance: Data must be directly aligned with the intended task or domain. For example, lidar data is valuable for training advanced driver assistance systems in vehicles, but is not useful for training facial recognition software.

- Quality: Data should be accurate, consistent and free from noise, errors or biases. A large dataset filled with irrelevant or incomplete data won't help train a model to perform optimally and accurately. A smaller, high-quality dataset can outperform a larger, low-quality one.

- Quantity: The required volume of data for effective model training varies based on factors like model complexity, task difficulty and the intended application. More complex tasks often require larger, high-quality datasets to ensure reliable learning and generalization.

- Diversity: A wide range of data points is crucial to enhance model generalization and prevent overfitting, which occurs when the model performs well on the training data, but not on real-world data.

By investing in the creation and curation of high-quality data for generative AI, organizations can significantly enhance the performance of their GenAI models. Explore how developers can leverage a fine-tuning approach to improve a model's performance on targeted tasks without the need for extensive retraining.

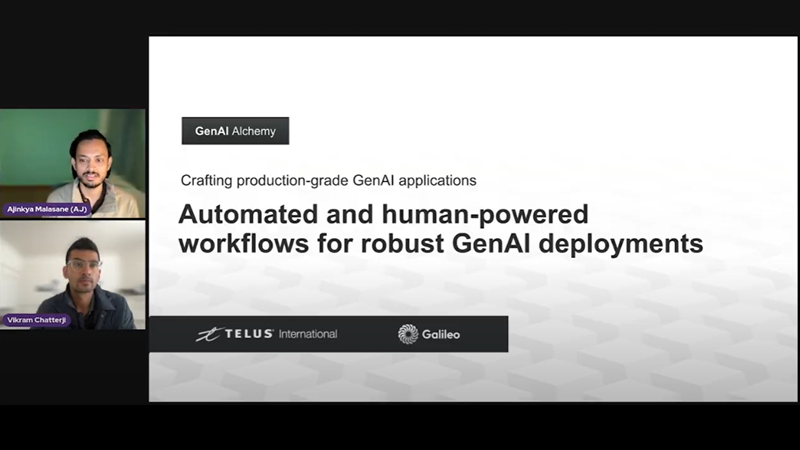

Crafting production-grade GenAI applications

Join Ajinkya Malasane, senior director, product strategy and marketing for TELUS Digital (formerly TELUS International), and Vikram Chatterji, co-founder and CEO of Galileo, a generative AI (GenAI) evaluation company, as they provide efficient and effective ways to solve key GenAI project blockers for easier deployments.

What is fine-tuning?

Fine-tuning adapts a pretrained LLM to a specific task by further training the model. To do so, you'll need a labeled, targeted dataset that conveys the specific domain knowledge, style or use case relevant to what you want the model to achieve.

For example, to train a model to produce accurate code snippets as responses, it needs to be fine-tuned on advanced coding datasets. These datasets must be created, reviewed and validated by experts with experience in relevant coding languages. By aligning the model to particular business objectives, fine-tuning enables that model to grasp the nuances of these tasks and excel in domain-specific applications.

How does fine-tuning work?

GenAI models learn through trial and error, motivated by strong incentives to succeed.

Through reinforcement learning, foundational models emerge as generalist productivity assistants — models capable of handling a broad spectrum of tasks with impressive versatility but lacking the expertise required for highly-specialized applications. Transforming models for real-world use cases requires a focused fine-tuning approach to adapt the pretrained model to perform better in a particular task or domain.

Large language model fine-tuning methods

There are a variety of techniques for fine-tuning LLMs, including supervised/unsupervised fine-tuning, reinforcement learning from human feedback (RLHF), prompt engineering and retrieval augmented generation (RAG). While they are all distinctive methods, each is important to the overall fine-tuning process.

Unsupervised versus supervised fine-tuning

Unsupervised fine-tuning focuses on training models using large volumes of unlabeled data. While this approach builds strong general language capabilities, it often lacks the precision required for more specialized tasks like summarization and classification.

Supervised fine-tuning (SFT), on the other hand, is used to prime a model to generate responses that align with specific business objectives. This technique uses labeled, targeted datasets, significantly reducing instances of errors like hallucinations and toxic content.

In supervised fine-tuning, human experts create labeled examples to demonstrate how to respond to prompts for different use cases like question-answering, summarization or translation. SFT can be approached in two ways: full parameter fine-tuning (full FT) or parameter-efficient fine-tuning (PEFT).

- Full FT: All model parameters are trained on new data tailored to the target task. This approach requires a large, distinct dataset containing around 10,000 to 100,000 prompt-response pairs (PRPs). By training the entire model on the task-specific data, full FT can result in a more significant adaptation of the model to that particular task, potentially resulting in more accurate performance. Full FT requires substantial computational resources and time.

- PEFT: With this approach, only some of the model's parameters are fine-tuned on the specific task and the dataset is kept to between 1,000 to 10,000 PRPs. This approach is resource-efficient and helps maintain the LLM's core knowledge while enhancing task-specific performance.

The choice between these methods depends on the desired model performance, the amount of training data available and compute cost considerations.

An example of providing responses to enhance model performance on the TELUS Digital platform, Fine-Tune Studio.Reinforcement learning with human feedback

Rather than relying on predefined objectives like traditional reinforcement learning, RLHF mimics human learning by capturing nuance and subjectivity through human feedback.

The process begins with training a model that translates human preferences into a numerical reward signal. By providing the model with ample direct feedback from human evaluators, it learns to replicate how humans assign rewards to different model responses, allowing training to continue offline without human involvement. RLHF combines the strengths of human intuition with machine efficiency, resulting in AI that better understands and meets user needs.

The RLHF rating system typically involves comparing human feedback on different model outputs, such as having users compare two LLM responses to the same prompt. Humans rank these outputs based on their relative favorability, while also identifying flaws in each response. This feedback is aggregated into weighted quality scores, which are normalized into a reward signal to guide reward model training.

RLHF does carry risks of overfitting and bias. This is especially true if the feedback comes from a narrow demographic, which can potentially lead to performance issues when the model is used by different groups. As a result, RLHF requires diverse datasets that reflect a wide range of human interactions and preferences. Additionally, to ensure the model accurately interprets and responds to human feedback, comprehensive human-in-the-loop evaluations are essential.

Using Fine-Tune Studio to rate responses for RLHF.Prompt engineering

In addition to RLHF and domain-specific fine-tuning, prompt engineering is important for aligning model responses with brand guidelines and tone. Structured input prompts guide the model, enabling it to generate relevant and accurate responses with minimal training data. This is particularly useful for applications like chatbots, question-answering systems and content generation. By crafting prompts carefully, organizations can instill specific brand attributes into model outputs.

For example, consider a hypothetical educational technology company that specializes in training users for English-language proficiency tests like the international English language testing system (IELTS). The company wants to be perceived as capable in delivering expert guidance, personalized learning paths and helping users achieve high English proficiency. A user might inquire about learning a new language like French, a query that doesn't directly align with the company's core focus.

User query: "How can I learn French?"

Without proper context, the model might suggest general language learning applications or methods that don't align with the company's expertise in English-proficiency exam preparation. To ensure the response remains relevant to the brand's core offerings, a carefully constructed default prompt can guide the model's response.

Default prompt: "You are the company's AI assistant, providing expert guidance in English language proficiency exams like IELTS. Your responses should focus on helping users with structured, exam-oriented learning paths. If a query falls outside of exam training, politely direct the user to relevant resources while maintaining focus on English-language proficiency."

By adhering to this default prompt, the model might respond with something like the following:

AI assistant: "While our platform is primarily focused on IELTS preparation, learning a new language like French can complement your language proficiency. For structured learning, we recommend starting with foundational grammar and vocabulary, just as we do in IELTS training. Would you like to access resources on how to integrate French learning into your exam preparation or explore specific courses we offer to enhance language skills?"

This response provides helpful advice while keeping the conversation aligned with the company's focus.

Retrieval-augmented generation

This technique combines retrieval mechanisms with generative-base AI models to enhance the accuracy of content creation and information synthesis. It does this by retrieving contextually relevant information from external sources such as proprietary databases, web search results, document repositories or industry-specific application program interfaces (APIs).

Fine-tuning these models involves using two types of ground-truth datasets composed of validated and verified data that is considered the 'true' data on which the model needs to learn. Both are vital for optimizing RAG models to produce high-quality, contextually appropriate content.

- End-to-end synthesis rating assesses both the retrieval and summarization processes, ensuring that the synthesized content is accurate and relevant to the context.

- Retrieval context recall is used to evaluate the accuracy of the retrieval model, often requiring a human workforce to validate the relevance and precision of the retrieved data.

Even when your LLM has been fine-tuned to task-specific requirements, the journey doesn't end there. Deploying GenAI systems with confidence requires systematic evaluation, improvement and monitoring for performance, safety and reliability.

Evaluate, adjust and scale model performance

LLM capabilities are generally evaluated through pairwise comparison, where two responses to a single question are evaluated to determine which is preferred, and single-answer grading, where a score is assigned to a response. These methods assess how well the model reproduces the linguistic patterns it has already learned. However, these evaluations often fail to measure more advanced reasoning, creativity or problem-solving capabilities that require the model to go beyond replicating pretraining data and generalize to unseen or novel situations.

Evaluating your fine-tuned LLM's performance on an unseen dataset against user-defined benchmarks and objectives ensures the model has learned the desired patterns. It also helps to confirm that the LLM can adapt to the domain-specific requirements of different users and is better aligned with the businesses you serve.

You can use various metric scores to assess the performance of the model, such as:

- The bilingual evaluation understudy (BLEU) score to evaluate text generation quality.

- Recall-oriented understudy for gisting evaluation (ROUGE) score to evaluate the model's summarization capabilities by comparing overlap between generated and reference texts.

- Human evaluation to assess qualitative aspects of the model's output.

These assessments can then be used to guide the development and improvement of LLMs, while helping to ensure the models are useful, safe and effective for your users.

Beyond the aforementioned assessment methods, the model-in-the-loop approach is becoming increasingly popular. In this method, models themselves are used to provide preliminary evaluations of AI outputs, which is proving to be faster and more cost-effective than human-only evaluations over large datasets. While leveraging advanced AI models as evaluators is appealing, the associated costs, latency and privacy concerns render it impractical for many production environments. Similarly, when use cases are complex or high risk, nothing beats having a human-in-the-loop approach. Relying solely on human judgment, however, can be resource-intensive and difficult to scale.

In our evaluations, we find that a hybrid approach — combining human expertise with automated methods — offers a practical solution. Additionally, establishing efficient workflows for human feedback and incorporating metric-driven insights into the evaluation process is crucial for optimizing model performance.

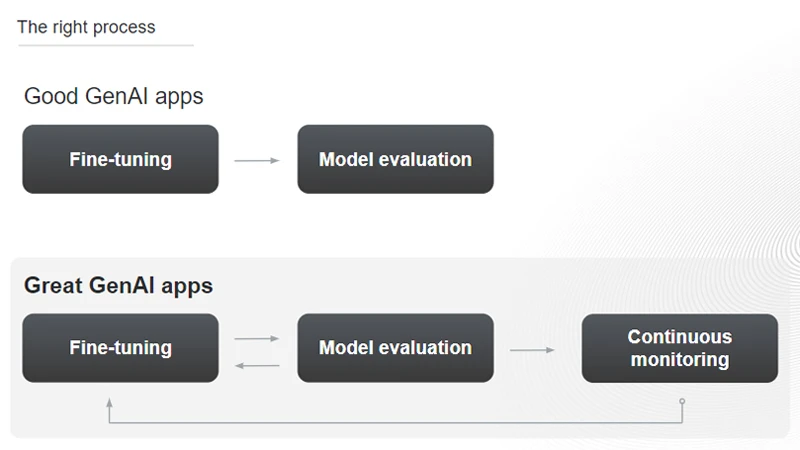

Commit to continuous monitoring: Fine-tune, iterate, repeat

Large language models are probabilistic and autoregressive by design, meaning they generate text by predicting the next word based on the probability distribution of previous words. Since these predictions are not deterministic and can vary with each iteration, they may produce errors, inconsistencies or contextually inappropriate outputs over time. As a result, continuous monitoring is necessary to ensure the model maintains coherence, accuracy and relevance, especially in real-time applications or critical decision-making environments.

Given the wide variety of fine-tuning techniques, achieving ideal model performance often requires multiple iterations of training strategies and setups. For example, you'll need to adjust datasets and hyperparameters like batch size, learning rate and regularization terms. This process should continue until you reach a satisfactory outcome that's aligned to the metrics most relevant to your use case.

Beyond this, you must continuously monitor production traffic to surface quality metrics, issues and alerts, and detect anomalies (for example, prompts that are not covered by your evaluation datasets) to add them to your test suite. By doing so, organizations can proactively identify and address issues such as data drift, concept drift and bias and security vulnerabilities to mitigate risks and ensure the continued effectiveness of deployed models.

A diagram that shows the difference in fine-tuning processes between good and great GenAI applications.

A diagram that shows the difference in fine-tuning processes between good and great GenAI applications.Building responsible generative AI together

Large language model issues such as hallucinations can be reduced in frequency and intensity by using high-quality training data and maintaining model transparency by understanding its inner workings and how it makes decisions. However, at this stage in LLM development, it's simply not possible to entirely eliminate hallucinations. That's why ongoing monitoring and iterative improvements are vital for enhancing the reliability and trustworthiness of LLM outputs, and moving closer to desired performance benchmarks.

TELUS Digital's diverse global AI Community plays a crucial role in meeting the rigorous demands of GenAI model alignment and safety. Complementing our human expertise, we have developed state-of-the-art technology to address the challenges of fine-tuning with precision, ensuring that your models achieve the desired performance and safety benchmarks.

For a deeper dive into fine-tuning techniques and best practices, check out our webinar, Crafting production-grade GenAI applications. By watching the recording, you'll also learn how to lighten your GenAI stack by implementing automated and human-powered solutions to address the key challenges of generative AI projects.