A realistic decision framework for your data labeling strategy

Today, it’s common knowledge that training and validating sophisticated machine learning (ML) models requires large volumes of high-quality labeled data. Despite this, my first-hand interactions with hundreds of ML team leaders and C-suite executives of artificial intelligence (AI) start-ups revealed that the market has a skewed understanding of labeled data acquisition strategies. These companies and start-ups span different phases of the AI maturity curve.

Most ML teams at the higher end of the AI maturity curve consider labeled data acquisition one of their main priorities and understand the layers of complexity involved in the process. However, most innovators at the other end rely on workarounds for data in the interim. I found that almost all ML companies go through a cycle of self-realization: they ignore the data annotation part of the ML pipeline in the initial stages, then eventually build out massive software and operations to handle their diverse dataset requirements.

Variable factors like machine learning use cases, labeling tools, workforce requirements and cost significantly influence data labeling strategies at various stages of AI innovation. But diverse and high-quality ground truth dataset requirements remain consistent across all applications. Therefore, it’s safe to assume that there is considerable ambiguity regarding effective data collection strategies that are cost-effective and compatible with evolving machine learning needs.

Before I dive into the decision framework, it’s necessary to look at a few myths and oversights that occur while decision-makers build their data labeling strategies.

The inherent over-simplification of the data labeling process

First, reducing the process to a mere hire-annotate-pay model only builds further roadblocks to achieving ML-driven automation goals. The most critical prerequisite for any functional ML model is high-quality labeled training data, so compromising on cost only leads companies further down a rabbit hole.

The traditional labeling budget is the product of a fixed cost of $7 per annotator hour and the number of hours of annotation required. This way of calculating a labeling budget is substantially inaccurate because it excludes the hidden costs incurred while ML engineers set up the annotation process.

Factors overlooked in the data labeling process

Let’s go through the process step by step to understand how the labeling budget overshoots this simplified equation.

Step 1: The ML engineer first creates guidelines that clearly define the annotation classes and the expectations for each class/attribute, so that human annotators can be trained on them. This step recurs a few times because, realistically, nobody gets it right on the first try; the guidelines require multiple iterations.

Step 2: Next, the ML engineer finds or builds annotation tools that support their data formats, produce highly accurate pixel-level annotations and scale to accommodate their large dataset requirements. This evaluation/development process can take a few days or weeks, depending on the scope of the labeling projects.

Step 3: The ML engineer then either sources the required number of skilled human annotators from a third party or hires part-time annotators. Finding the right workforce requires a well-designed pilot program and an evaluation of multiple workforce options, followed by negotiations and contracting (in collaboration with finance and legal teams) with the most suitable candidates.

Step 4: Next, the ML engineer sets up the tool infrastructure, trains the annotators on tool usage and provides annotation requirements as tasks. They also create and manage deadlines for annotation tasks. Additionally, they track the progress of the annotators regularly.

Step 5: The ML engineer then sets up workflows, logic or scripts to test the accuracy of the output, gives feedback to annotators who fall short and reassigns incorrect labels for rework. This step repeats for each batch of annotations.

Step 6: They repeat the previous step until the labeled data reaches their desired level of accuracy. The whole process is time-consuming and labor-intensive.
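Steps 5 and 6 amount to a review loop run on every batch. Here is a minimal sketch in Python of what such a check could look like, assuming a small gold-standard subset with trusted labels is mixed into each batch. The function name, the item ids, the class names and the 95% target are all hypothetical and not taken from any specific tool.

```python
from typing import Dict, List, Tuple

TARGET_ACCURACY = 0.95  # hypothetical acceptance threshold


def review_batch(
    submitted: Dict[str, str],
    gold: Dict[str, str],
) -> Tuple[float, List[str]]:
    """Score a batch against gold labels.

    Returns the accuracy on the gold items in the batch and the ids of
    items whose labels disagree with gold and should be sent for rework.
    """
    checked = [item_id for item_id in submitted if item_id in gold]
    if not checked:
        raise ValueError("batch contains no gold-standard items to check")
    rework = [i for i in checked if submitted[i] != gold[i]]
    accuracy = 1 - len(rework) / len(checked)
    return accuracy, rework


# Illustrative data only.
gold = {"img_1": "car", "img_2": "pedestrian", "img_3": "car", "img_4": "cyclist"}
batch = {"img_1": "car", "img_2": "car", "img_3": "car", "img_4": "cyclist"}

accuracy, rework = review_batch(batch, gold)
accepted = accuracy >= TARGET_ACCURACY  # if False, rework and review again
```

In this example one of four gold items is mislabeled, so the batch falls below the target and `img_2` goes back for rework. The loop ends only when a batch clears the threshold, which is why the engineer's time adds up quickly.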

Often, traditional annotation budgets do not include the additional costs of these steps. Actual budgets must account for the time and effort of the ML engineer(s), which costs $100/hour or more, as well as the total expenditure on annotators. Together, these add up to roughly 10 times the $7/hour figure. Additionally, as the specificity, diversity and volume of the data to be annotated increase, so does the complexity of the tooling infrastructure, workforce and project management. Managing these complex processes efficiently becomes critical for achieving high-quality output.
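To make the arithmetic concrete, here is a rough sketch in Python that compares the traditional budget with one that also counts engineer time. Only the $7/hour and $100/hour rates come from the figures above. The hour counts are hypothetical, picked only to show what ratio of engineer time to annotation time produces a gap of about tenfold.

```python
from dataclasses import dataclass

ANNOTATOR_RATE = 7.0   # USD per annotator hour (figure quoted in the article)
ENGINEER_RATE = 100.0  # USD per ML engineer hour (figure quoted in the article)


@dataclass
class LabelingBudget:
    annotation_hours: float
    # Hypothetical input: hours spent on guidelines, tooling, hiring,
    # training, quality checks and project management.
    engineer_hours: float

    @property
    def naive_cost(self) -> float:
        """Traditional budget: annotator rate times annotation hours."""
        return ANNOTATOR_RATE * self.annotation_hours

    @property
    def actual_cost(self) -> float:
        """Annotator cost plus the engineer time the naive budget omits."""
        return self.naive_cost + ENGINEER_RATE * self.engineer_hours

    @property
    def effective_hourly_rate(self) -> float:
        """Total cost spread over each hour of annotation delivered."""
        return self.actual_cost / self.annotation_hours

    @property
    def multiple_of_naive(self) -> float:
        return self.actual_cost / self.naive_cost


# Hypothetical project: 1,000 annotation hours, 630 engineer hours.
budget = LabelingBudget(annotation_hours=1000, engineer_hours=630)
```

With these assumed hours, the naive budget is $7,000 and the actual cost is $70,000, or $70 per annotation hour. The multiple depends entirely on how much engineer time a project consumes, so it is worth estimating that number before committing to a budget.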

And truth be told, ML engineers are better off doing what they do best instead of being pulled in different directions to optimize these operational processes.

The essential guide to AI training data

Discover best practices for the sourcing, labeling and analyzing of training data from TELUS Digital, a leading provider of AI data solutions.

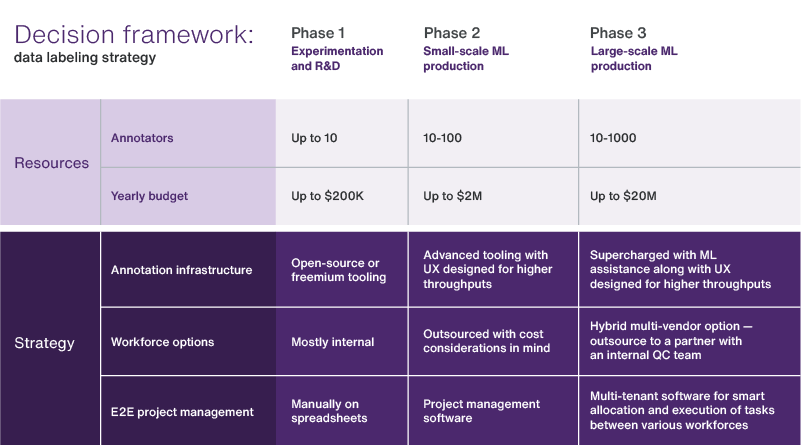

A realistic decision framework for a data labeling strategy

To make this framework widely applicable, I’ve used the three phases of the AI development cycle and three main data labeling functions as the basis for decision-making. Along with explaining the sophistication level of each function in each phase of the development cycle, the framework also provides ballpark budgets to simplify category assessment for decision-makers.

I’ve already established the complexity of the data labeling setup process and the reality of the budgets involved in data labeling. Let us now dive into the functions:

- Annotation infrastructure

- Workforce options

- End-to-end (E2E) project management

The sophistication of these functions depends on the volume of data collected for annotation and the number of human annotators required. For example, depending on the data volume, the annotation tooling can be 100% manual or very sophisticated, with auto-validation checks or ML assistance to minimize human error and ensure higher throughput.
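As an illustration of what an auto-validation check might look like, here is a small Python sketch that flags obviously invalid bounding-box annotations before they reach a human reviewer. The `Box` schema, the class names and the three rules are assumptions made for this example, not the behavior of any particular annotation tool.

```python
from typing import List, NamedTuple, Set


class Box(NamedTuple):
    """Hypothetical bounding-box annotation in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def validate_box(box: Box, width: int, height: int, allowed: Set[str]) -> List[str]:
    """Return a list of problems found; an empty list means the box passes."""
    errors = []
    if box.label not in allowed:
        errors.append(f"unknown class: {box.label}")
    if box.x_min >= box.x_max or box.y_min >= box.y_max:
        errors.append("box has zero or negative area")
    if box.x_min < 0 or box.y_min < 0 or box.x_max > width or box.y_max > height:
        errors.append("box extends outside the image")
    return errors


classes = {"car", "pedestrian"}  # illustrative label set
good = validate_box(Box("car", 10, 20, 110, 90), 640, 480, classes)
bad = validate_box(Box("truck", 600, 50, 700, 40), 640, 480, classes)
```

Here `good` is empty, while `bad` collects three problems: an unknown class, an inverted box and coordinates outside the image. Checks like these are cheap to run on every submission and catch mechanical errors that would otherwise consume reviewer time.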

The workforce can be 100% internally sourced or outsourced to low-cost countries, depending on the data volumes selected for annotation. A hybrid multi-vendor option is more useful when annotator requirements exceed 1,000.

Managing tens of human annotators and managing thousands pose very different problems. The former does not require any complex project management, but the latter requires sophisticated software to train annotators, allocate labeling tasks and track performance on accuracy, speed, consistency and more. It is very similar to monitoring an assembly line.
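At the simplest level, performance tracking can be sketched as a per-annotator summary. The Python example below assumes each completed task is logged with the annotator's id, the time spent and the outcome of a quality review. The `TaskRecord` fields and the sample data are hypothetical. It covers accuracy and speed only, since measuring consistency would also require the same items to be labeled by more than one annotator.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class TaskRecord:
    annotator: str
    seconds_spent: float
    correct: bool  # outcome of the quality review for this task


def summarize(records: Iterable[TaskRecord]) -> Dict[str, Dict[str, float]]:
    """Aggregate task count, accuracy and average time per annotator."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record.annotator].append(record)

    summary = {}
    for annotator, tasks in grouped.items():
        summary[annotator] = {
            "tasks": len(tasks),
            "accuracy": sum(t.correct for t in tasks) / len(tasks),
            "avg_seconds": sum(t.seconds_spent for t in tasks) / len(tasks),
        }
    return summary


# Illustrative log entries only.
log = [
    TaskRecord("ann_a", 30, True),
    TaskRecord("ann_a", 50, True),
    TaskRecord("ann_b", 20, True),
    TaskRecord("ann_b", 40, False),
]
report = summarize(log)
```

In this sample, `ann_a` is slower but fully accurate, while `ann_b` is faster with half the labels failing review. With thousands of annotators, the same summary would feed dashboards and task allocation rather than being read by hand.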

Leverage TELUS Digital’s AI Data Solutions

This decision framework is compatible with the requirements of most ML teams. However, other critical factors like data security and automation influence labeling strategies, adding an extra layer of complexity to this otherwise simple framework.

Based on this realistic framework, TELUS Digital has built a sophisticated and secure suite of annotation infrastructure and workforce solutions to help small, mid-sized and large companies seamlessly power their data pipelines for the faster development of AI applications.

I hope this framework helps industry players avoid the pitfalls of poor data labeling architecture decisions driven by common misconceptions, and helps the ML community build highly accurate AI models faster than ever.

Discover our AI training platform and contact us to unlock best-in-class labeling solutions for your ML initiatives.